I spent my first day at GDC 2011 at the AI Summit, but first I had breakfast with Susan Gold.

Note: the text in this post may very well be incomprehensible. I'll try to write something more understandable in a later post where I summarise GDC. Here I am still processing impressions.

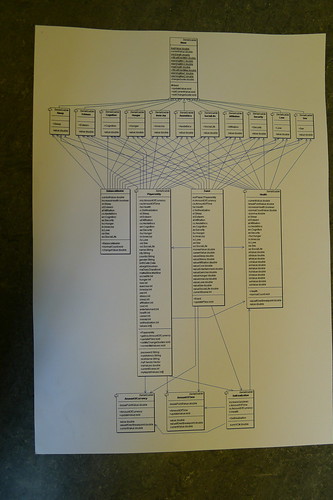

Creating Your Building Blocks: Modular Component AI Systems

Alex Champandard (AiGameDev.com), Brett Laming (Rockstar Leeds) and Joel McGinnis (CCP Games North America)

My favorite part of the talk was the table with different entities. I also appreciated the tips and tricks.

//Note to self: consider whether this approach is applicable as blocks in OL

AI Pr0n: Maximum Exposure of Your Debug Info!

Brian Schwab (Blizzard Entertainment), Michael Dawe (Big Huge Games/38 Studios) and Rez Graham (Electronic Arts (Sims Division))

The strength of this talk was the many examples: for each argument we were shown how it would be applied. Brian also made a good point: don't ask what is wrong, ask why. It helps you solve the problem better.

Hm, I'm not sure whether I should comment on the title of this talk; it has been a matter of debate on Twitter. What they meant by the title was pr0n as in food, handbag or headphone pr0n, so... no disappointment here, oh no, definitely not!

Random notes:

Rez:

- meta autonomy
- local autonomy (utility)
- dev-tools in C#

Brian:

- The human brain is wired to see the change, the delta, not the full state. We want to see the delta.
- Deterministic simulations.
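To make Brian's point about deltas concrete, here is a minimal sketch of a debug helper that reports only what changed in an agent's state between two frames, instead of dumping the full state. The function name `state_delta` and the idea of storing agent state as a flat dictionary are my own assumptions for illustration, not anything shown in the talk.

```python
def state_delta(previous, current):
    """Return only the fields of an agent's debug state that changed between two frames.

    Hypothetical helper: state is assumed to be a flat dict of field name -> value.
    A field missing from one frame is reported as None for that frame.
    """
    changed = {}
    # Union of keys so that fields added or removed between frames are also reported.
    for key in previous.keys() | current.keys():
        before = previous.get(key)
        after = current.get(key)
        if before != after:
            changed[key] = (before, after)
    return changed


frame_1 = {"hp": 10, "goal": "patrol"}
frame_2 = {"hp": 7, "goal": "patrol", "target": "player"}
print(state_delta(frame_1, frame_2))
```

The unchanged `goal` field is left out, so the eye goes straight to the health drop and the new target. With a deterministic simulation the same two frames can be replayed and diffed again later.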

***

I had lunch with Ian Horswill. Like me, he is interested in psychological simulations for interactive drama (and he is a fellow “have-to-take-a-photo-of-the-plate-before-I-eat” person). We talked about EEG and how noisy the signal is, and he mentioned the P300 wave, which is associated with surprise; the 300 refers to the time delay, roughly 300 ms after the stimulus. Note to self: check the Emotiv SDK, and what about NeuroSky? It would be great to add this to the MM, since it is hard to manufacture situations of potential surprise. Detecting the P300 could be interesting. Hm, but how to measure it? I can hardly surprise myself. Hmm. Use a friend, give a strange gift?

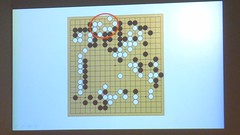

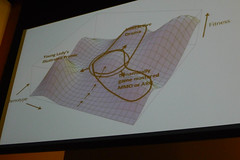

Lay of the Land: Smarter AI Through Influence Maps

Damian Isla (Moonshot Games), Alex Champandard (AiGameDev.com) and Kevin Dill (Lockheed Martin)

I love talks that somehow include spreading activation networks, so yes, this was a good one.

Note to self: Noah's comment on the otherness of the unseen process. Paul S's comment on game == interface. This is a good example of a SAN, usually an unseen process, applied so that it is represented. (Memory ref: the 2nd layer of OL is a representation of the low-level process.) AlexC used it as an authorial tool, saying “distance decay”, coupling the decay with a spatial property. Yeah, representation.

Kevin Dill: mobs aware of the influence of other mobs. Entities working together, or against each other. //Another take on what the SAN represents: loyalty levels between entities governing entity behavior.

Damian Isla: spatial functions.

- line of sight
- distance
- distance in path

He showed a very nice, illustrative series of visuals to demonstrate them.
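For my own memory, a minimal sketch of an influence map with distance decay, where "distance" is distance in path (so influence flows around walls rather than through them). This is my own toy version, not the speakers' implementation: the grid encoding (`'#'` for blocked cells), the function name `influence_map`, the per-step `decay` factor, and summing signed strengths from several sources are all assumptions for illustration.

```python
from collections import deque


def influence_map(grid, sources, decay=0.5):
    """Spread influence from sources over walkable cells, decaying per path step.

    Hypothetical sketch:
    grid    -- list of equal-length strings; '#' marks a blocked cell.
    sources -- list of ((row, col), strength) pairs; strength may be negative.
    decay   -- multiplier applied per step of path distance (0 < decay < 1).
    Returns a 2D list of floats: the summed influence of all sources per cell.
    """
    rows, cols = len(grid), len(grid[0])
    result = [[0.0] * cols for _ in range(rows)]
    for start, strength in sources:
        # Breadth-first search gives the shortest path distance in steps,
        # so walls block influence instead of letting it leak straight through.
        dist = {start: 0}
        queue = deque([start])
        while queue:
            r, c = queue.popleft()
            # Distance decay: influence falls off geometrically with path length.
            result[r][c] += strength * decay ** dist[(r, c)]
            for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                nr, nc = r + dr, c + dc
                if (0 <= nr < rows and 0 <= nc < cols
                        and grid[nr][nc] != '#' and (nr, nc) not in dist):
                    dist[(nr, nc)] = dist[(r, c)] + 1
                    queue.append((nr, nc))
    return result


level = ["...",
         ".#.",
         "..."]
# One friendly source (positive) and one hostile source (negative).
m = influence_map(level, [((0, 0), 1.0), ((2, 2), -1.0)])
for row in m:
    print(row)
```

Summing signed strengths lets friendly and hostile influence cancel out, which is one way to read Kevin Dill's mobs working together or against each other; taking the maximum per side instead would be an equally valid design choice.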

Staffing the Extras: Creating Convincing Background Character AI

Paul Kruszewski (Grip Entertainment) and Ben Sunshine-Hill (University of Pennsylvania)

They presented an AI LOD (level of detail) system for character simulations. Very impressive.

Turing Tantrums: AI Developers Rant!

Dave Mark (Intrinsic Algorithm), Brian Schwab (Blizzard Entertainment), Richard Evans (Little Text People), Kevin Dill (Lockheed Martin)

This was a hilarious talk! Loved it. Anyone with access to the GDC Vault should save this one for a rainy day and watch it to feel better.

After the talks, several dinner groups formed and then merged, and we all went out together for Mexican food.